Intro

When ChatGPT first launched, there was this intense desire to be able to do as much as possible with it. Writing emails? Fixing code? Just ask the genie and it should have the answer!

Over time though, you learn that maybe the genie doesn’t have all the answers but it has a decent amount that you could leverage. I want to emphasise the word leverage here because that’s what we care about. A way to multiply our impact per unit of time.

When Claude Sonnet 3.5 + Cursor launched in November 2024, this was the first prototype of that kind of leverage. Now you could obtain leverage in the most important product skill: engineering. It started to dawn on me that every day my goal isn’t to do more work: it’s to build systems that increase the amount of leverage per unit of time every day, week and month.

I wasn’t fully sure where this obsession came from but it felt deeply intuitive. If you think back on the history of human civilisation, leverage has been reserved for the few:

In ancient Egypt, you had to be born a Pharaoh to have slaves that could build structures for you

A few hundred years ago, you had to be born a King or become one to command your kingdom of subjects

In more recent times, you had to be able to raise venture capital dollars to pay people salaries to get work done

Today, however, we only have to type the right keys on the keyboard to generate code that gets the work done.

Another way of putting it is that the pathway to leverage has collapsed from:

Capital → Labour → Code → Leverage

to:

Code → Leverage

That means that the following holds true: “If I can prompt correctly, I can generate labour leverage independent of human labour or dollars”

This is an incredibly important line since it means your independence as a founder/operator increases. Scaling no longer means dealing with management or speaking with investors to increase your output via capital-based leverage.

The Crunch

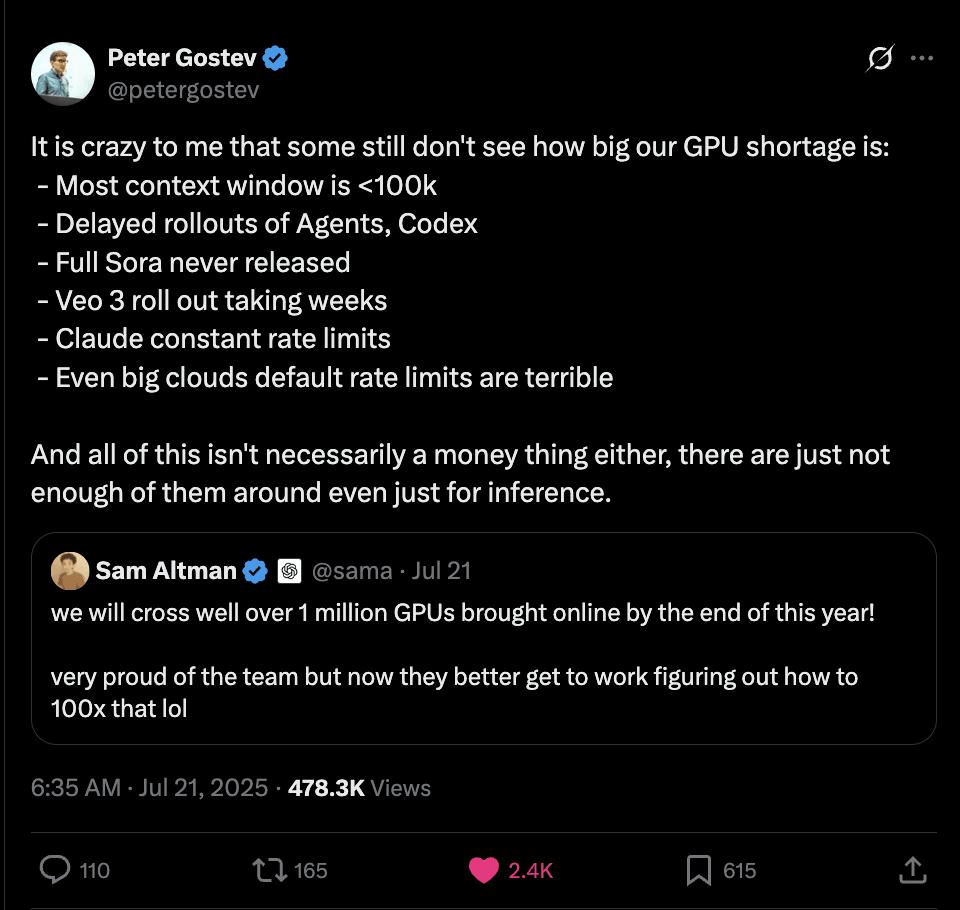

However, there are no free lunches. The most probable scenario is that we run into a massive compute shortage as more people lean on this type of leverage (compute). This isn’t just about not having enough GPUs (which everyone knows): everything further down the supply chain is going to start running into bottlenecks.

Even if you wanted to build a datacenter today, you’d need to wait till 2030 to be connected to the grid! This of course opens up avenues for all sorts of other energy generation opportunities such as:

Solar

Nuclear

Natural gas

… and of course oil.

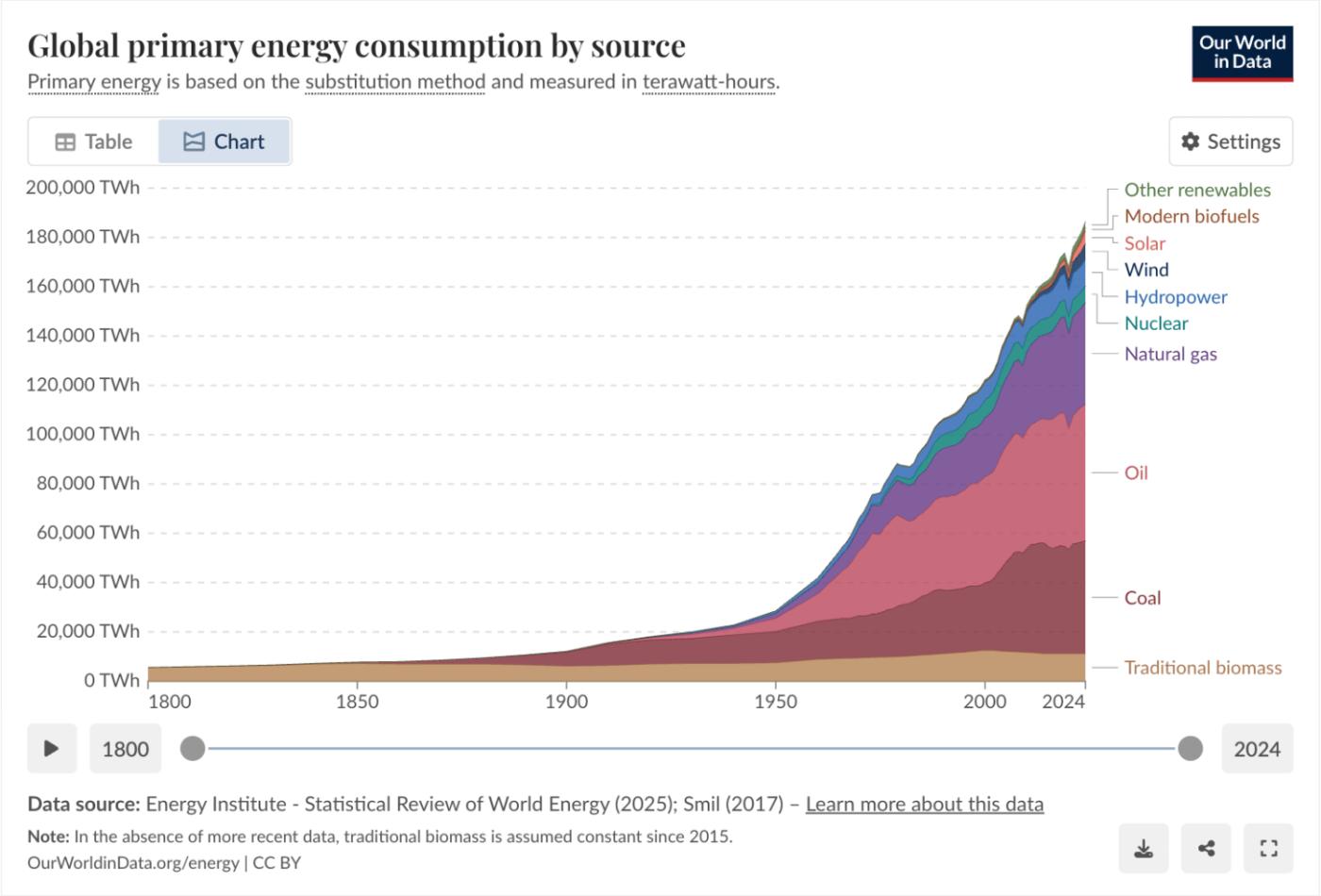

Regardless of which fighter you choose, all of the above will be required in some kind of mix. The least bullish avenue is traditional grid-based energy delivery, given the choke point there. Below is a chart of our current energy consumption trend and the sources that comprise it.

Solar, nuclear and other renewables are still incredibly early in terms of how much upside they have left. Oil and coal will continue to be the beasts they are, but so many other sectors depend on them that they will most likely be less competitive for compute-based demand.

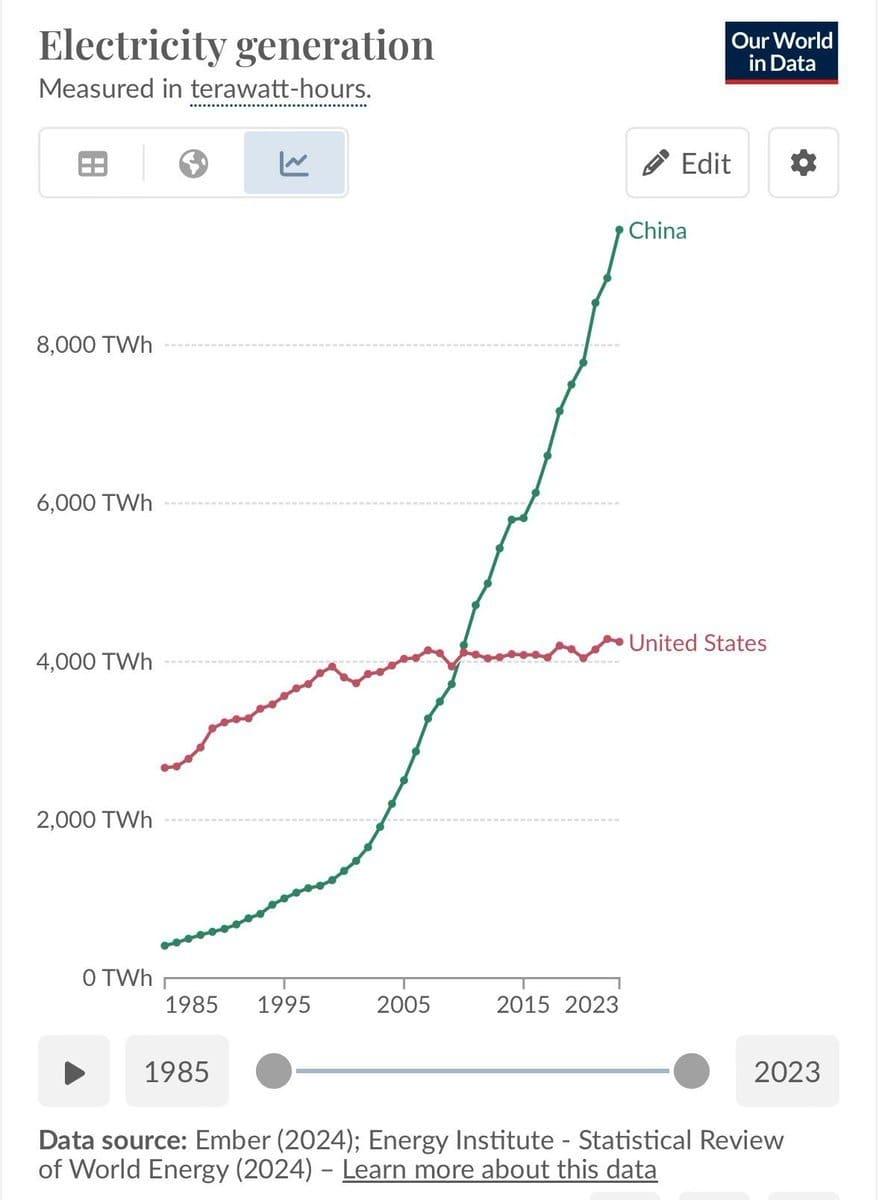

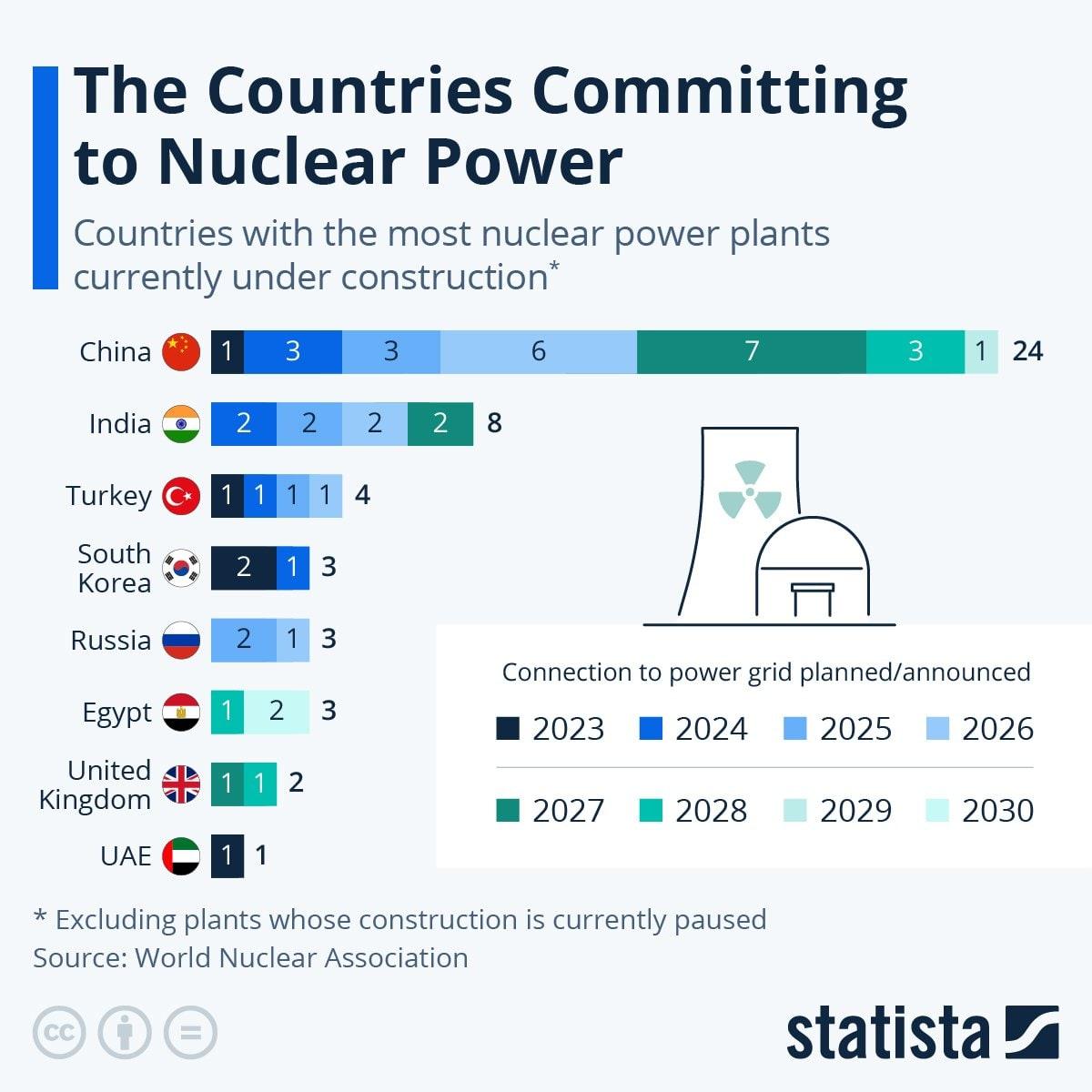

When reasoning about how different countries have been approaching energy generation, this chart blows my mind:

In case it isn’t clear, the future of energy generation lives in China, not the United States. Some will say that nuclear will come in and save us, but there are still a lot of challenges around it and time to deployment is still not very fast. Renewables like solar have low deployment time but of course have other complications (i.e. the sun is not always out, so you need batteries/storage). Of course the question is whether the United States can “bend the curve” of electricity generation, but there isn’t a lot of data to suggest so. Crusoe and other companies are doing some neat things in this area but it’s still to be seen!

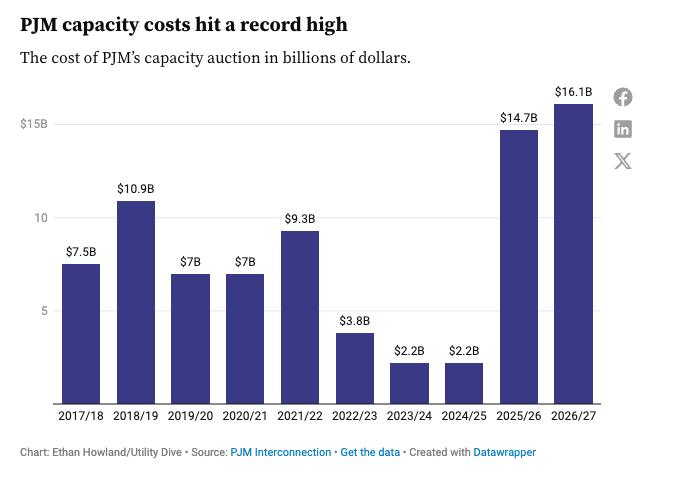

Another interesting chart is the following: PJM, which is one of the largest grid operators in the country, has seen the cost of its capacity auctions skyrocket. Last year was the real jump, and this year’s increase was 22%. Relative to prior years, it paints a very clear picture of where things are heading.

LLM Attention

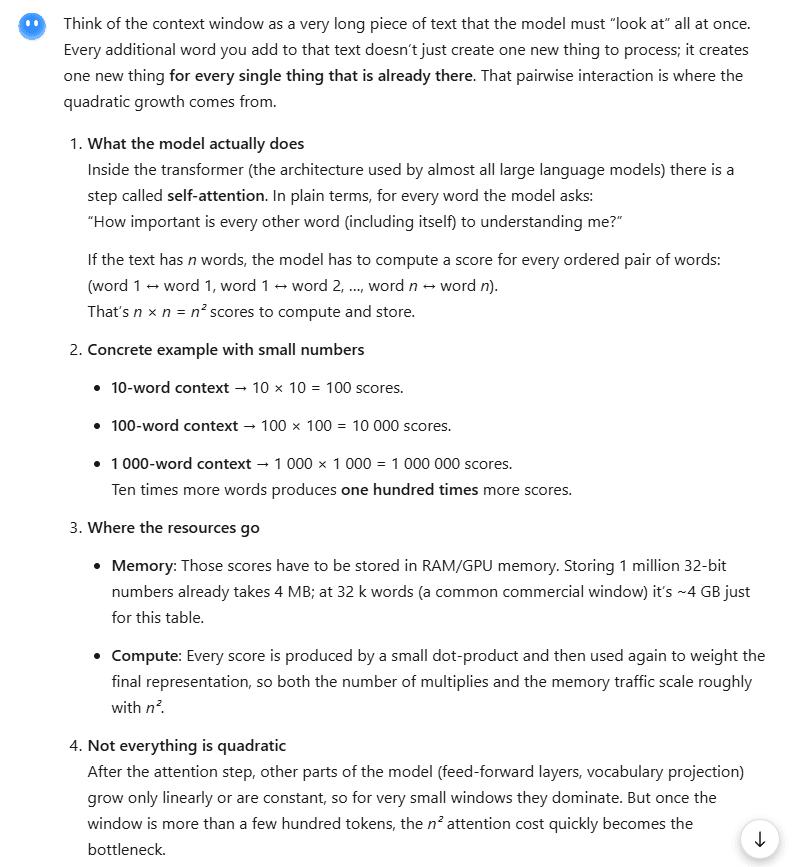

The other interesting factor here is that usage doesn’t scale linearly. My thesis here is that as people rely on LLMs, the demand for context only increases over time, not decreases. Better context means better results from whatever task you have at hand.

As context windows grow, the compute required does not grow linearly! Take for example an LLM that increases its context window from 10k → 100k tokens. You’d think that’s a 10x increase in compute resources?

Not so fast. For the attention step, where every token is compared against every other token, it’s actually closer to 100x.
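To make that arithmetic concrete, here is a rough back-of-the-envelope sketch. It assumes standard dense self-attention, where the work grows with the square of the number of tokens. The function names are made up for illustration, and real systems muddy the picture: the other layers of a model scale roughly linearly with tokens, and tricks like sparse attention or caching reduce the cost, so the true end-to-end multiplier sits somewhere between 10x and 100x.

```python
def linear_ratio(old_tokens: int, new_tokens: int) -> float:
    """What you'd expect if compute grew in step with context length."""
    return new_tokens / old_tokens


def attention_ratio(old_tokens: int, new_tokens: int) -> float:
    """Growth in dense self-attention work, assuming cost ~ tokens squared."""
    return (new_tokens / old_tokens) ** 2


def attention_pairs(tokens: int) -> int:
    """Number of token-to-token comparisons in one dense attention pass."""
    return tokens * tokens


old_ctx, new_ctx = 10_000, 100_000

print(linear_ratio(old_ctx, new_ctx))     # 10.0
print(attention_ratio(old_ctx, new_ctx))  # 100.0
print(attention_pairs(old_ctx))           # 100000000
print(attention_pairs(new_ctx))           # 10000000000
```

Going from 10k to 100k tokens takes the attention comparisons from 100 million to 10 billion per pass, which is where the 100x comes from.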

What this fundamentally means is that if consumer and pro usage continues to pick up, and people come to expect 100k context windows as a baseline, our compute needs will be far higher than most people imagine.

We’re going to need so much energy to power the future it isn’t even funny. However, it does create an interesting dynamic where the countries that generate the most energy will continue to obtain leverage in ways that other countries won’t be able to.

Will America come up with innovations in model advancements and China train them? Who knows.

Closing

There’s a lot to unpack and still so many factors that this piece misses out on. It’s an area I’m still researching and getting to know, but it’s becoming clear that we all need to start thinking about energy and things lower down the stack that we typically didn’t have to care about, given the enormous stress our future needs will put on them.

New models of energy distribution, novel distributed compute architectures and advancements in renewable technology are going to be the biggest drivers of innovation in the next decade.

If you think the future looks anything like the past you’re not imagining hard enough. As a civilisation I believe we’re going through a massive upgrade that is so fast that we’re all collectively scrambling.

If you have any thoughts on this piece (corrections or additions), I’d love to hear from you :)